K#8. You're not a Tech Data Scientist if you don't master this skill

#8. Introduction to experimentation – one of the pillars of data science

Heyyy there 👋

Welcome back to K’s DataLadder ✨! Each week, I share a story from my life as a Tech Data Scientist to help you level up in your career.

We’ve grown into an amazing community of 963 curious and driven data lovers (soon to be 1000 waouuu). Thank you for making this journey count ❤️

This week, we’re diving into one of the key skills all tech data scientists must master, and as you know, I’m not talking about machine learning.

A few months ago, I wrote an article about the 6 most crucial skills for tech data scientists. That article got 35k views, so I figured it’s time to dig deeper into these core skills, starting with the trickiest yet most crucial one:

🔥EXPERIMENTATION🔥

Why most crucial? Because we do it every week.

Spotify is famous for being super product-oriented. We’re always launching new features, which means we’re always A/B testing.

There’s no escaping it, so I’ve been massively developing this skill and I’m taking you with me on this learning journey! I’m lucky to learn directly from top experts, and I’m sharing all the insider insights.

It’s a complex topic, so I’ll break it down into several editions. I’ll teach you everything I’ve learned about experimentation at Spotify. So stay tuned if you want to boost your chances of landing a job in big tech!

Reading Time: 5 minutes

Agenda:

Life checkpoint

This week’s story

Basics of experimentation

Why we bother experimenting


Life Checkpoint – What am I up to?

(feel free to skip if you don’t give two cents about my life, I won’t be upset 🥲)

I often read content creators talk about how it’s important to “build in public” – basically share your progress as you go.

And I’ve been doing that. Well kind of.

I mostly share the positives on LinkedIn, because Idk how to talk about the negative without sounding like I’m whining. But I mean I have my fair share of struggles.

LinkedIn feels too open-space for me, so I decided to share my ups and downs here.

So I’ve been particularly stressed and overwhelmed lately. I also have a chronic illness that worsens when I’m tense, so it doesn’t help either.

Honestly guys, I love creating content but no one told me how much effort it takes to get things off the ground. The reality is it feels like I’m juggling two full-time jobs.

Plus, I’m not great at managing it all.

I’m a big disciple of quality over quantity—that’s what’s helped me grow so fast in only one year. But as a result, I spend a lot of time iterating over my content. That’s why I’ve been announcing a YouTube video launch for weeks now, but there’s still no video out lol.

I basically spent weeks scripting, filming and editing, only to end up completely dissatisfied with the result and delete the whole shit. I don’t see the point in pushing boring or redundant content. I get no satisfaction or pride from that.

Idk how others manage this but I’m praying for the 4-day week to hit us ASAP 🙏

This Week’s Story

In January, I started working on the TV experience of the Spotify app. It’s a great place to be in because we’re launching a lot of features.

And lots of feature releases means lots of experimentation!

A couple of editions ago, I mentioned that one of the reasons I didn’t get promoted was because I lacked experience with experimentation. Now, I have a fantastic opportunity to upskill thanks to all the features we’re rolling out.

But before we release them, we need to test them. That brings us to this week’s topic. Unlike ML, experimentation pays off reliably and quickly: we always get insights at the end, whether about the features, our users or our methods.

The Basics of Experimentation

1. What are we even talking about?

Experimentation is a statistical approach that helps us isolate and evaluate the impact of product changes – launching features, UX updates and all!

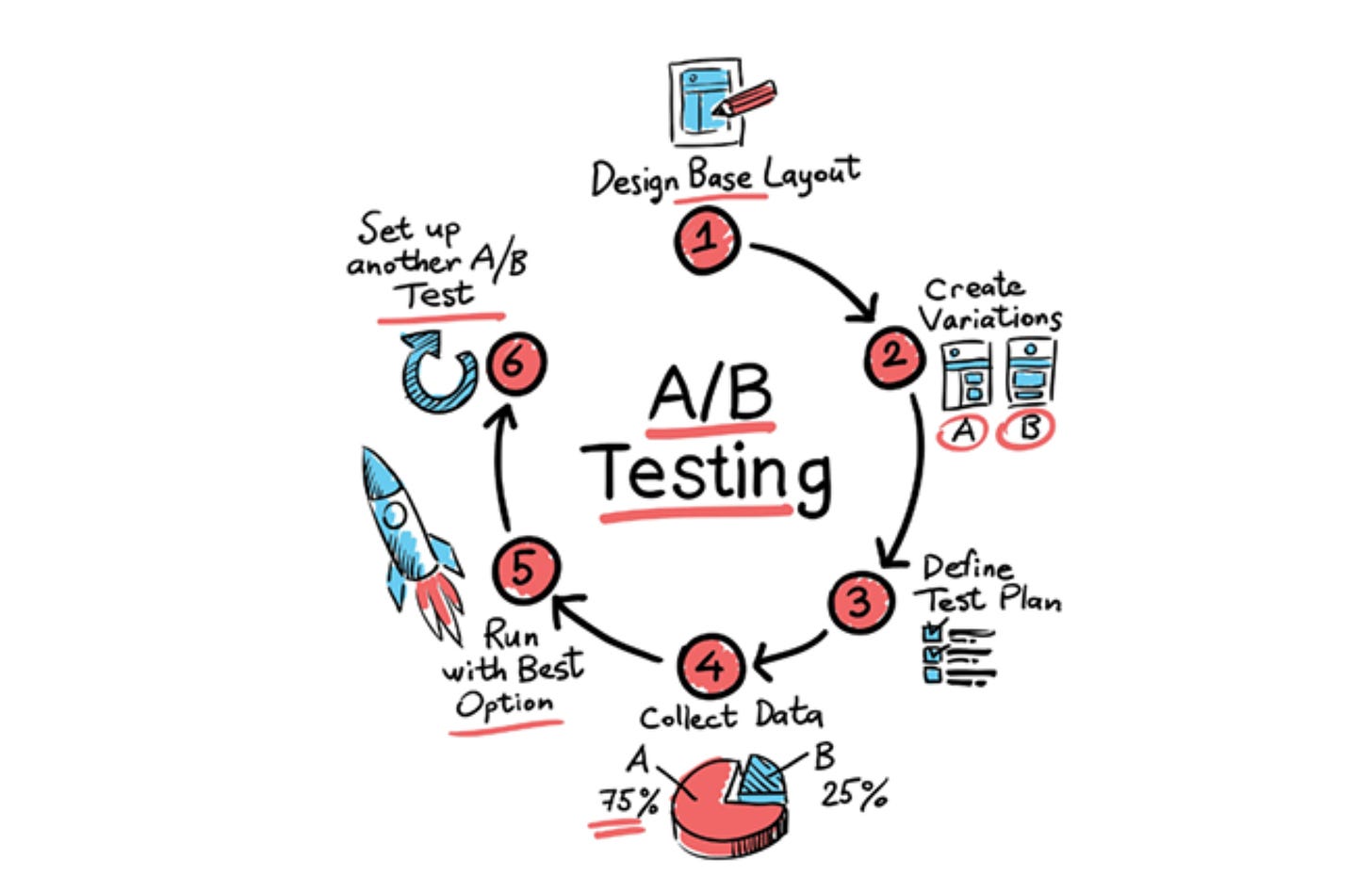

You’ve certainly heard of A/B testing, right?

Well, A/B testing is one of the two main methods used in product experimentation, the other being Feature Rollouts.

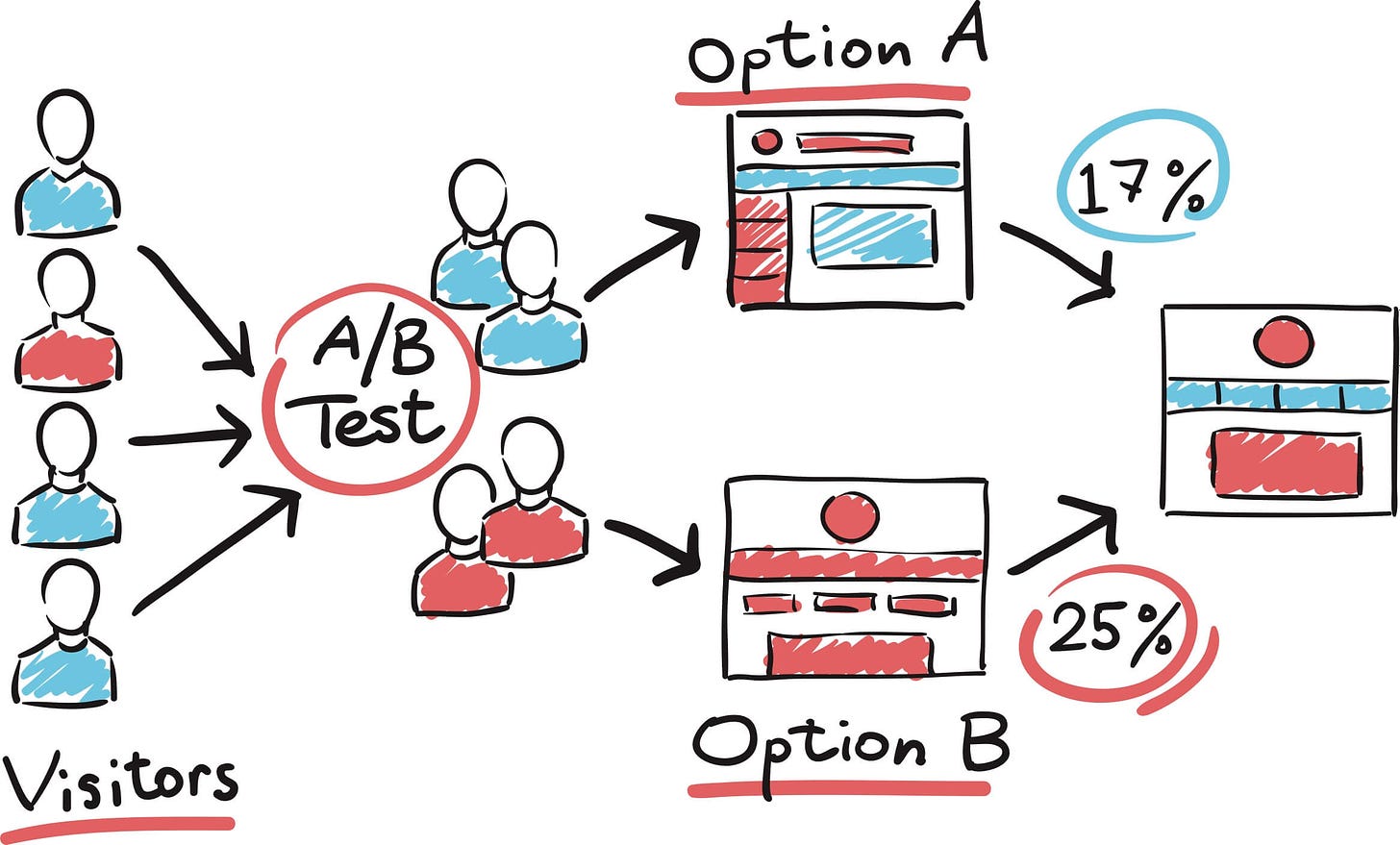

It's essentially Hypothesis Testing, a concept we all studied in statistics. In hypothesis testing:

You start with a question or a hypothesis that you want to test, for example “Will adding playlist shortcuts to the home screen increase user engagement?”.

To test this hypothesis, you make a change (add the shortcuts).

Then compare the outcome (user engagement) between two groups: one that experienced the change (treatment group) and one that didn't (control group).
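To make the treatment vs. control comparison concrete, here's a minimal Python sketch. Everything in it is an assumption for illustration: the engagement numbers are simulated (not real Spotify data), the helper names `simulate_engagement` and `two_sample_z_test` are made up, and the test is a simple large-sample z-test on the difference in means, which is only one of several ways to run this comparison.

```python
import random
from statistics import NormalDist, mean, variance


def simulate_engagement(n, avg_minutes, rng):
    """Return n simulated daily engagement values in minutes (made-up data)."""
    return [max(0.0, rng.gauss(avg_minutes, 10.0)) for _ in range(n)]


def two_sample_z_test(control, treatment):
    """Two-sided z-test for a difference in means (normal approximation)."""
    diff = mean(treatment) - mean(control)
    # Standard error of the difference, allowing unequal variances.
    se = (variance(control) / len(control)
          + variance(treatment) / len(treatment)) ** 0.5
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return diff, z, p_value


rng = random.Random(42)
control = simulate_engagement(5000, 30.0, rng)    # no shortcuts
treatment = simulate_engagement(5000, 31.0, rng)  # with shortcuts

diff, z, p_value = two_sample_z_test(control, treatment)
print(f"difference in means: {diff:.2f} min, z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the gap between the two groups is unlikely to be pure chance. It doesn't tell you whether the gap is big enough to matter for the product, so you'd still look at the size of the difference.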

2. The Scientific Method (+ legit example)

Experimentation follows the same steps as the scientific method:

Observation: Identify an area of interest, a user pain point or a problem to solve.

Question: Formulate a specific question or hypothesis based on the observation.

Hypothesis: Develop a hypothesis that predicts the outcome of the change.

Experiment: Design & conduct an experiment to test the hypothesis.

Analysis: Collect & analyse the data to see if the results support the hypothesis.

Conclusion: Draw conclusions & make decisions based on the findings.

Once it’s done, we move on to the next problem, and we do this process all over again.

Example: Is it worth adding lyrics to the Spotify app?

Disclaimer: I don’t share any confidential information from my workplace here (you know I don’t want to get fired 😇)

Idea to test: Displaying lyrics will increase user engagement and retention.

Observation: Users enjoy singing along with songs and often look up lyrics on external websites.

Question: Will adding lyrics to songs on Spotify increase the time users spend on the app, increase user satisfaction and reduce churn rates?

Hypothesis: We believe that providing lyrics alongside audio content will lead to increased session durations, engagement and higher user retention.

Experiment:

Control group: Users have access to audio content without lyrics.

Treatment Group: Users have access to audio content with lyrics displayed.

Metrics: Average session duration, daily active users (DAUs), and churn rates.

Analysis: Compare the metrics between the control group and the treatment group over the 4 weeks following the exposure date, using the 1 week before exposure as a baseline, to see if adding lyrics leads to a significant increase in user engagement and retention.

Conclusion: If the treatment group shows higher engagement and retention, this supports the hypothesis that adding lyrics improves user experience. Spotify can then decide to implement the lyrics feature for all users.
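For a rate metric like churn, the analysis step could look something like the sketch below. The counts are invented for illustration (they are not Spotify numbers), `two_proportion_z_test` is a hypothetical helper, and the pooled two-proportion z-test is just one common choice for comparing two rates.

```python
from statistics import NormalDist


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Two-sided z-test comparing two rates, using the pooled standard error."""
    rate_a, rate_b = events_a / n_a, events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Made-up counts: users who churned during the 4-week window.
control_churned, control_users = 520, 10_000      # without lyrics
treatment_churned, treatment_users = 450, 10_000  # with lyrics

rate_c, rate_t, z, p_value = two_proportion_z_test(
    control_churned, control_users, treatment_churned, treatment_users
)
print(f"churn: control {rate_c:.2%}, treatment {rate_t:.2%}")
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A negative `z` here means the treatment group churned less than the control group. The same function works for any yes/no metric, such as whether a user was active on a given day.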

Experimentation is a collaborative process involving multiple stakeholders:

Product Managers (PMs) & Data Scientists drive product changes and formulate the hypotheses.

Engineers & Designers implement the changes and design the experiment.

Data Scientists define the metrics, analyse the results, and present the statistical insights to inform decision-making.

This process helps make sure that any changes we make are based on data and statistical evidence, which reduces the risk of making the wrong product decisions.

Why do we bother experimenting?

Because we don’t want to waste time launching the wrong products or messing things up. More specifically, we do it to:

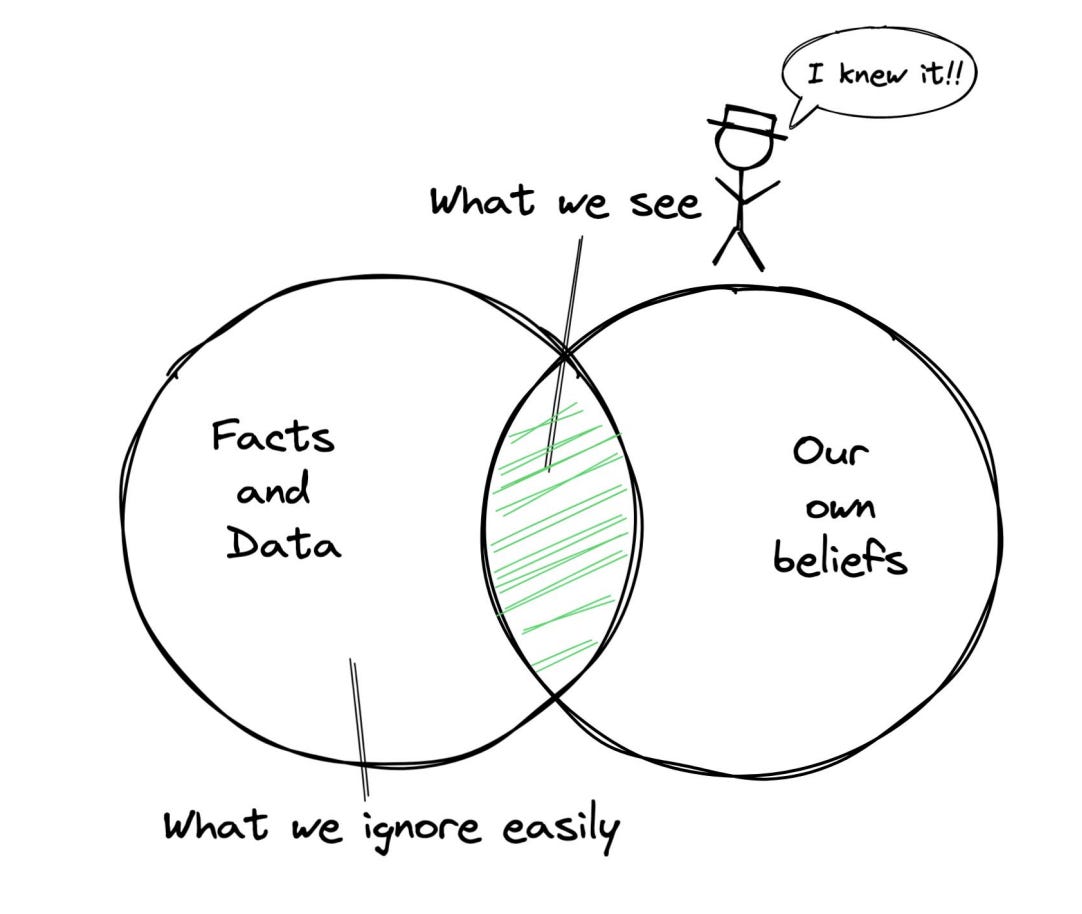

1. Objectively test our assumptions:

As humans, we have inherent biases. We tend to look for evidence that supports what we already believe (confirmation bias). To counteract this, we need to test our assumptions objectively, and hypothesis testing is a great tool for that.

We also have the IKEA effect, where we overvalue the products we create ourselves. Experimentation helps us see the true value of our product changes.

Example:

Hypothesis: Integrating a new “Mood-based Playlist” feature will significantly enhance user satisfaction and increase daily active users.

Bias: PMs & Designers might overestimate the appeal and effectiveness of this feature based on personal enthusiasm and selective anecdotal feedback.

Experiment: Implement the mood-based playlist for a sample of users and compare their satisfaction ratings and daily active user metrics with those of a control group. This helps objectively assess the feature’s impact using real user data, and avoid confirmation bias!

2. Avoid accidentally breaking things:

Every change we make carries the risk of unwanted consequences. For mature products like Spotify or those of the MAANG companies, it's easier to unintentionally degrade the user experience when we try to improve it. Experimentation helps us catch these issues early!

Example:

Hypothesis: Tweaking the recommendation algorithm will improve music playback quality.

Risk: The new algorithm might inadvertently increase buffering times.

Experiment: Roll out the new algorithm to a small user segment and monitor playback quality and buffering times. This helps identify any negative impacts before a full-scale release.
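Here's a rough sketch of what monitoring a "don't break this" metric could look like in Python. It's an illustration under assumptions: the buffering times are made up, `guardrail_check` is a hypothetical helper, and it uses a one-sided normal approximation that would need far more data than this tiny sample to be trustworthy.

```python
from statistics import NormalDist, mean, variance


def guardrail_check(control, treatment, alpha=0.05):
    """Flag a lower-is-better metric if treatment is significantly worse."""
    diff = mean(treatment) - mean(control)
    se = (variance(control) / len(control)
          + variance(treatment) / len(treatment)) ** 0.5
    # One-sided bound: we only care whether the metric got worse.
    z_crit = NormalDist().inv_cdf(1 - alpha)
    lower_bound = diff - z_crit * se
    return {"diff": diff, "lower_bound": lower_bound,
            "breached": lower_bound > 0}


# Made-up buffering times in seconds for a small rollout segment.
control_buffering = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.3, 1.1]
treatment_buffering = [1.6, 1.8, 1.5, 1.7, 1.9, 1.6, 1.4, 1.8]

result = guardrail_check(control_buffering, treatment_buffering)
print(result)
```

If `breached` comes back `True`, the increase in buffering is unlikely to be noise, which is the signal to pause the rollout and investigate before going any wider.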

3. Ditch bad ideas early:

It’s not about shipping a lot of features but shipping the right ones. Experimentation allows us to test ideas in real-world settings and avoid investing in changes that don't actually improve user experience.

Example:

Hypothesis: A new social sharing feature will increase user engagement.

Experiment: Implement the feature for a subset of users and measure engagement metrics like shares per user and session length. If data shows no significant improvement or even a decrease in engagement, we can abandon the feature early, and save time and resources.

4. Establish causal impact:

To find out if a product change causes an outcome, we need to isolate its impact from other factors. Experimentation allows us to establish a causal link between a change and its effect.

Example:

Hypothesis: Displaying song lyrics will increase user session duration.

Experiment: Introduce lyrics for a random sample of users and compare their session duration with a control group that doesn’t have lyrics. By isolating this variable, we can establish whether the presence of lyrics directly causes users to spend more time on the app.
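The "random sample" part is what makes the causal claim possible, so here's a minimal sketch of one way to do the assignment. It's an assumption for illustration, not how Spotify does it: `assign_group` is a hypothetical helper that hashes the user ID together with an experiment name, so each user always lands in the same group and different experiments get independent splits.

```python
import hashlib


def assign_group(user_id: str, experiment: str,
                 treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'treatment' or 'control'."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 32 bits of the hash to a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"


# Hypothetical user IDs and experiment name.
users = [f"user_{i}" for i in range(10_000)]
groups = [assign_group(u, "lyrics_test") for u in users]
share = groups.count("treatment") / len(groups)
print(f"share of users in treatment: {share:.1%}")
```

Because the split doesn't depend on anything about the user (device, country, listening habits), the two groups should look alike on average, and any difference in session duration can be attributed to the lyrics.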

I’ll stop now because it’s already too long but think of this post as an intro to experimentation!

Next week, I’ll tell you more about the two different types of experimentation: A/B tests & Feature Rollouts, the differences, the advantages and most importantly when to use one or the other (super important!).

If you liked this edition, please leave a ❤️ or a comment to let me know! Until then, see you next week for more data stories 🫶

If you’re enjoying these insights, don’t forget to subscribe and follow along on YouTube, Instagram & LinkedIn for more updates and stories.
